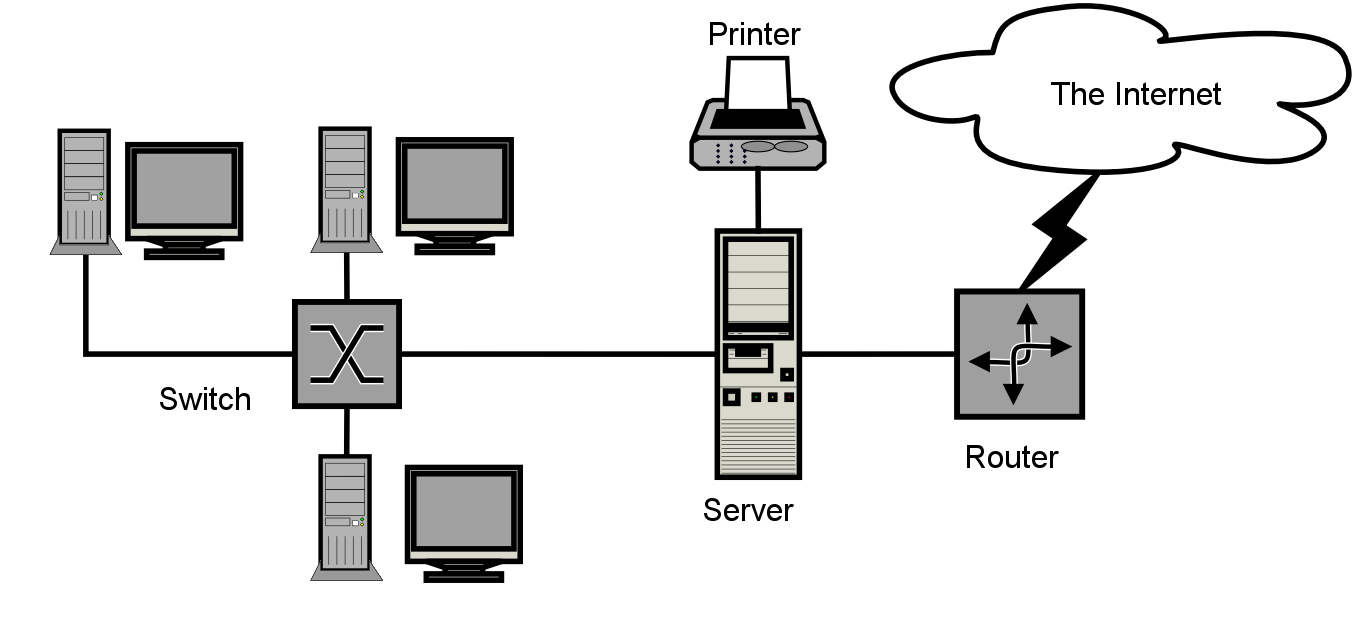

In its simplest terms, cloud computing means your computing requirements are running on someone else’s hardware. The term cloud computing traces back to the 1960s, when engineers often drew the internet as a cloud in their network diagrams. You have probably seen something like this before.

The cloud signified that there was some entity out there, in this case the internet, that you did not need to worry about or understand in detail – it’s just there for your use. Cloud computing is a similar concept. However, instead of just connecting to the internet, you connect to services available over the internet, like servers, storage, software, networks, databases, and applications. It has become a commodity like the electricity and water that are delivered to your home. We don’t need to understand how the water and electricity get to our house – we simply enjoy the fact that they are there and someone else has made it all possible. It makes life simpler.

So if the cloud makes life simpler for those that want to access its many resources, why does it appear to be so complicated? There are many different ways to utilize the cloud, and it is those use cases that give rise to a whole plethora of various cloud services. The future growth of cloud computing is going to increase your choices. The type of cloud service you decide to choose depends on what you are trying to accomplish. So what are the common cloud services we see today?

The first thing to consider when thinking about different types of cloud services is where the cloud is located and who can access it. From that point of view, there are three main types of cloud services: public, private, and hybrid.

A public cloud exists because some business enterprise decided it would be a great idea to build an entire computing infrastructure and make it available to the public. That enterprise paid all the upfront capital costs, hired the people to manage everything, and guarantees the service to a certain level of uptime. All you have to do is pay for what you decide to use. Sound familiar? That’s how your electric or water company works, and the same principle applies to supplying computing resources as a utility. Almost everyone has heard of AWS, GCP, and Azure, but there are lots of players in this space. We have done some exciting work on a public cloud infrastructure that was specifically created to address the computing requirements of government agencies.

Like the public cloud, a private cloud is designed to provide computing as a utility. However, a private cloud is intended to serve only one customer or organization. In the past, many of these private clouds were located within the walls of the organization; in other words, they were hosted internally. With the explosion in cloud industry growth, organizations can now host externally and still have the cloud dedicated to their needs. The reason for choosing private over public comes down to the level of security and control the business wants over its computing resources. Using our electric utility example again: a homeowner could decide to live off the electric grid by providing their own solar panels, wind generators, gas generators, etc. Initially, this would be expensive, but it would give them total control of their power generation and protect them if the entire power grid collapsed. Which cloud you choose depends on your business and budget requirements.

A hybrid is a combination of two elements, so a hybrid cloud is a combination of a public and a private cloud. In a way, it is the best of both worlds. Some of your business applications may need to be secured privately, while others don’t require that level of control. For example, a company may have a customer-facing website that needs to run 24x7 without much supervision. That application could run efficiently on a public cloud, while the data that controls all aspects of the business is stored in a private cloud. Back to our electric utility analogy: instead of building all the capacity to live entirely off the electrical grid, you could stay connected and buy a large generator. If one source of electricity fails, you have the option of using the other to keep the lights on. Again, it comes back to business requirements and budget.

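To break the types of cloud down further, you also need to consider how the cloud is being used, not just where it is located and who has access. It is therefore useful to understand the following four classifications: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), Software as a Service (SaaS), and Anything as a Service (XaaS).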

If you plan to purchase computing services on a pay-per-use basis, then you want IaaS. That means you will use the servers, network, storage, etc., in someone else’s data center. This gives you the flexibility to pay for what you use, and there are no capital costs. Essentially, all your expenses are operating costs. If you need more resources as you scale, you buy more. If you scale down, you shut services down. What this amounts to is that you are renting hardware and paying for the share you use. You also have the flexibility to configure these underlying resources in any manner you see fit, just as if they were your own. AWS is a popular example of this type of service.
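To make the pay-for-what-you-use idea concrete, here is a minimal sketch of the arithmetic. The hourly rate and purchase price are hypothetical figures picked only to keep the numbers simple; they are not any vendor’s actual pricing, and the comparison ignores power, staff, and maintenance.

```python
def pay_per_use_cost(hourly_rate: float, hours_used: float) -> float:
    """Operating cost of rented capacity: you pay only for the hours you use."""
    return hourly_rate * hours_used


def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rented usage at which renting has cost as much as buying."""
    return purchase_price / hourly_rate


# Hypothetical figures (assumptions, not real prices).
HOURLY_RATE = 0.10       # dollars per server-hour
PURCHASE_PRICE = 3000.0  # dollars to buy a comparable server outright

# A server used only during business hours versus one that is always on.
part_time_month = pay_per_use_cost(HOURLY_RATE, hours_used=8 * 22)
always_on_month = pay_per_use_cost(HOURLY_RATE, hours_used=24 * 30)
break_even = break_even_hours(PURCHASE_PRICE, HOURLY_RATE)

print(f"Business-hours month: ${part_time_month:.2f}")
print(f"Always-on month:      ${always_on_month:.2f}")
print(f"Renting matches the purchase price after {break_even:,.0f} hours")
```

The point of the sketch is the shape of the cost, not the numbers: scaling down shows up immediately as a smaller bill, which is not true of hardware you have already bought.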

As the name implies, PaaS provides not only the hardware but also the software and other services you need to manage the entire lifecycle of building your software applications. PaaS is a step up in the technology stack because the hardware and software are preconfigured so that you can become productive immediately. If you are a software developer, this saves you lots of hassle: you can begin churning out code quickly because all the tools you need are available on demand. Under this model, you also pay for what you use, so it’s a cost-effective way to get a quick start and avoid capital costs and setup delays. However, all of this comes with some trade-off in flexibility and control. Salesforce’s Force.com and Google App Engine are two common examples of this type of service.

Moving even further up the technology stack is SaaS. At this point, all you want is to use some software tool and have it work. You don’t want to own anything; you merely pay a subscription. End-users typically use SaaS applications, whereas software developers tend to use PaaS, and system administrators tend to use IaaS. Notice the trend? The higher up in the stack you go, the less you need to understand how everything works. SaaS is an immensely crowded space, and the SaaS applications out there appear to be endless. In elementary terms, if you are using a browser to run software, it’s most likely a SaaS application. When you open your Gmail (G Suite) account, you are using SaaS. If you are running Office 365 – hello SaaS. CRMs, ERPs, HRMs, and a vast host of other business applications are delivered as SaaS. As an end-user, the SaaS application is always up to date, your data is in the cloud and backed up, and support is a phone call or mouse click away.

XaaS is a general term for anything you may want to deliver as a service. There is a long list of what X can stand for, including Security, Functions, DevOps, Storage, Database, Analytics, etc. These names try to stay in line with the most recent cloud computing marketing trends. The general idea is that if you want something, there should be a way to deliver it as a service over the cloud. It’s the ultimate in modern convenience. You don’t need to own anything, but if you want it, it is there for you for as long as you need it, provided you are willing to pay the usage fee.

Cloud computing spending has been growing at 4.5 times the rate of IT spending since 2009 and is expected to grow at better than six times the rate of IT spending from 2015 through 2020. These cloud computing statistics suggest that the future is very bright for cloud computing and that the trend shows no signs of slowing down. So what trends are we experiencing now, and what do we need to watch for in the future?

We are huge fans of Docker, and we are not one bit surprised that containerization is driving a lot of activity towards the cloud. Software companies have been quickly adopting modern DevOps practices to accelerate the speed at which they can deploy their SaaS applications. Containers allow software developers to break up monolithic applications into much smaller services, each packaged in a container that can run on almost any host with a container runtime. Why is this a good thing? Since the container houses the application together with its operating environment, it is very portable and can be deployed quickly and easily. A tool like Kubernetes makes it easier to run containers at scale. We have helped many companies put containerization into practice and accelerate the speed at which they can deploy software.

It was not that long ago that CFOs were struggling to understand their telecom bill, and telecom companies were not doing much to make it easier. Itemized telecom bills that ran on for hundreds, if not thousands, of pages were the norm. Fast forward to today, and that problem now exists with cloud computing vendors. As customers demand more flexible pricing options and cloud services, the complexity of managing these costs has expanded as well. Complicating this even further is the fact that you can purchase cloud computing resources on demand in billing increments of a fraction of a second. Unless the CIO has strict cost controls in place, these cloud resources can easily be consumed without supervision. Therefore, as companies expand their cloud strategies, they are looking very carefully at how to control costs across the many different cloud platforms.
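As a rough illustration of what cost control across platforms involves, the sketch below rolls fine-grained usage records up into a total per platform and flags any platform that exceeds its budget. The record fields, platform names, rates, and budgets are all hypothetical; real billing exports differ from vendor to vendor.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    platform: str         # hypothetical label such as "cloud-a"
    service: str          # e.g. "compute" or "storage"
    seconds_used: float   # usage is metered in seconds
    rate_per_hour: float  # dollars per hour (assumed pricing unit)


def cost_by_platform(records):
    """Sum the cost of every usage record, grouped by platform."""
    totals = defaultdict(float)
    for record in records:
        totals[record.platform] += record.seconds_used / 3600 * record.rate_per_hour
    return dict(totals)


def over_budget(totals, budgets):
    """Return the platforms whose total cost exceeds their budget."""
    return sorted(p for p, cost in totals.items() if cost > budgets.get(p, 0.0))


# Hypothetical sample data.
records = [
    UsageRecord("cloud-a", "compute", seconds_used=7200, rate_per_hour=0.50),
    UsageRecord("cloud-a", "storage", seconds_used=3600, rate_per_hour=0.02),
    UsageRecord("cloud-b", "functions", seconds_used=90, rate_per_hour=4.00),
]
budgets = {"cloud-a": 1.00, "cloud-b": 5.00}

totals = cost_by_platform(records)
flagged = over_budget(totals, budgets)
print(totals)
print("Over budget:", flagged)
```

A platform with no budget entry is treated as having a budget of zero, so unplanned spending is flagged rather than silently ignored.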

As CIOs look for ways to simplify their operations, an obvious choice has been to eliminate the in-house data center. There is no strategic value in building a data center internally and managing the complexity of that environment; focusing on the computing and storage requirements of the business creates enough worry. Colocation, where you rent space for your equipment in a third-party facility, also acts as a stepping stone to full cloud migration and helps customers that opt for a hybrid cloud infrastructure to service their business needs.

Many of the customers we service adopt a hybrid cloud approach. If it is considered thoughtfully, a CIO can minimize costs and maximize security using a hybrid approach. Raw compute capacity can easily be deployed to the cloud, and storage can be kept in a colocation facility or on-premises. The challenge here is to build the software so that it can easily talk across these different platforms. Adopting a Service Oriented Architecture (SOA) that relies on APIs is something we assist clients with every day, allowing them to take advantage of hybridization.
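One way to picture software that talks across platforms is a single interface with interchangeable backends. The sketch below is only an illustration of that idea, not a description of any particular client system: the class names, key prefixes, and in-memory backends are all made up, and a real backend would call a storage API over the network.

```python
from abc import ABC, abstractmethod


class Store(ABC):
    """The one interface callers program against, whatever the backend."""

    @abstractmethod
    def put(self, key: str, value: bytes) -> None:
        ...

    @abstractmethod
    def get(self, key: str) -> bytes:
        ...


class InMemoryStore(Store):
    """Stand-in backend used here in place of a real storage service."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.items = {}

    def put(self, key: str, value: bytes) -> None:
        self.items[key] = value

    def get(self, key: str) -> bytes:
        return self.items[key]


class HybridStore(Store):
    """Routes sensitive keys to the private backend, the rest to the public one."""

    def __init__(self, private: Store, public: Store, private_prefixes: tuple) -> None:
        self.private = private
        self.public = public
        self.private_prefixes = private_prefixes

    def _backend_for(self, key: str) -> Store:
        # str.startswith accepts a tuple and matches any of its prefixes.
        if key.startswith(self.private_prefixes):
            return self.private
        return self.public

    def put(self, key: str, value: bytes) -> None:
        self._backend_for(key).put(key, value)

    def get(self, key: str) -> bytes:
        return self._backend_for(key).get(key)


private = InMemoryStore("private-cloud")
public = InMemoryStore("public-cloud")
store = HybridStore(private, public, private_prefixes=("customers/", "finance/"))

store.put("customers/42", b"account data")
store.put("web/index.html", b"<h1>Hello</h1>")
print("private:", sorted(private.items))
print("public: ", sorted(public.items))
```

Callers only ever see `Store`, so moving a category of data from one cloud to the other becomes a change to the routing rule rather than to every application that reads it.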

As businesses strive to reduce costs and automate decision making, they turn to AI and machine learning to provide the benefits. The trend in the past was to think of the cloud as an off-site place to process and store data. However, AI and machine learning have turned the cloud from an engine into a brain. The phrase “intelligent cloud” means the processing and data management capabilities of the cloud are trying to mimic human intelligence. The cloud can now hear your words (Alexa, Google Home), speak to you (Siri, Alexa), look at and understand your text and pictures (Google DeepMind, Google Photos), or drive your car (Waymo). In the future, you won’t hire an expert. You will send your data to the cloud and get your expert opinion through an API.

So what are the future trends of cloud computing? Here are some of the trends to watch out for.

There is this new mentality that if you are going to build a device, it needs to connect to the cloud. Why not? It’s so easy to do because your device can rely on the cloud to do all the heavy lifting. So your home thermostat doesn’t just set the temperature in your house; it decides what the temperature should be to make you most comfortable and save you money (Nest). Dexcom allows you to get your blood sugar readings continuously. These are just two of many examples of the trend toward having devices store, process, share, and interconnect over the cloud. This trend is spawning tremendous cloud adoption, as is evidenced in recent cloud computing statistics. As the ’80s band Devo should have sung, “When a problem comes along, IoT will fix it.” According to Gartner, the number of IoT devices in the world is expected to cross 20 billion by 2020. That’s more than two devices for every person on the planet.

The DevOps trend is not slowing down, and containers are accelerating the rate at which software can be deployed. If you are familiar with Google’s Cloud Platform, then you already know that everything at Google runs on containers. Containerization is one of the most sought-after services we provide because every software company is challenged with the same problem – the acceleration of IT. Everyone wants it faster, cheaper, and better. That’s the value of DevOps.

The human race is creating data at an alarming rate, and that trend is not slowing down. If you want a chuckle on how alarming, listen to Jim Gaffigan explain his perception of this trend as it relates to taking photos. The current level of global capacity for cloud storage is 600 EB (exabytes). An exabyte is a quintillion bytes (10¹⁸), or one billion gigabytes. That number is expected to quickly grow to 1.1 ZB (zettabytes) by the end of the year. A zettabyte is a sextillion bytes (10²¹), or one trillion gigabytes. Every line of code, picture, email, attachment, song, video, etc. ends up in the cloud for storage. This has driven the cost of storage down to historic lows, which is spawning greater demand. Remember before the year 2000, when programmers decided not to store the extra two digits in the year to save memory? Those days are over. The perception of most people is that storage is free, but it’s not, and it adds up, and cloud storage vendors are capitalizing on this trend. The last time I checked, I think I was paying over $28 per month to Apple, Google and Spotify to store my stuff.
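If the unit names are hard to keep straight, the conversions can be checked with a few lines of arithmetic. This sketch assumes decimal (SI) units, where each prefix is a power of ten, and uses only the 600 EB and 1.1 ZB figures quoted above.

```python
# Decimal (SI) storage units, expressed in bytes.
BYTES_PER_GB = 10**9
BYTES_PER_EB = 10**18
BYTES_PER_ZB = 10**21


def in_gigabytes(amount: float, bytes_per_unit: int) -> float:
    """Convert an amount given in some unit into gigabytes."""
    return amount * bytes_per_unit / BYTES_PER_GB


current_gb = in_gigabytes(600, BYTES_PER_EB)    # current capacity, 600 EB
projected_gb = in_gigabytes(1.1, BYTES_PER_ZB)  # projected capacity, 1.1 ZB
growth_factor = projected_gb / current_gb

print(f"1 EB   = {in_gigabytes(1, BYTES_PER_EB):,.0f} GB")
print(f"600 EB = {current_gb:,.0f} GB")
print(f"1.1 ZB = {projected_gb:,.0f} GB")
print(f"Projected growth: {growth_factor:.2f}x")
```

Since 1.1 ZB is the same as 1,100 EB, the projection amounts to capacity nearly doubling.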

Security is a primary concern of every corporation considering a move to the cloud. There is a predominant mentality that if I can see the blinking lights in my data center, it’s more secure than a server somewhere else. There is a perceived tangible loss of control. There is an excellent opportunity for solution providers to provide tools and services that extend the security capabilities beyond what the companies can provide for themselves. To stay ahead of hackers and other nefarious operators businesses need to invest in security and this trend will continue. At FP Complete, we build security into the development, deployment, and systems operations stages of the software product life-cycle. Designing for security is the least expensive way to tackle cloud security.

As a society, we have been programming computers since the 1940s when assembly language programs were written to run the first electronic computers. Every line of code ever written has been done as a step to accomplish some task. Over time, many of these tasks have become repetitive and therefore reusable and interchangeable. There is so much code out in the ether now that computer programming has become more an exercise of finding preexisting functions and code modules to build your program. If you want to sort 10 billion numbers, there is a program or function that already performs this task. Serverless computing is the evolution of all these years of coding. If you want your program to perform a specific task in the future, you will connect to that task, which is hosted in the cloud, and get your results. The ease of using these tools will also help grow the many use cases for cloud computing.
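To give a feel for what connecting to a task looks like, here is a minimal sketch of a serverless-style function. The `handler(event, context)` shape follows a convention used by some function-as-a-service platforms, but the event fields and response format here are assumptions made for illustration; each platform defines its own.

```python
import json


def handler(event, context=None):
    """Stateless task: sort the numbers supplied in the request.

    The function keeps nothing between calls, so a platform is free to run
    as many copies as it needs and to bill per invocation.
    """
    numbers = event.get("numbers", [])  # "numbers" is a hypothetical field name
    return {
        "statusCode": 200,
        "body": json.dumps({"sorted": sorted(numbers)}),
    }


# Invoking the function locally, the way a platform would on each request.
response = handler({"numbers": [3, 1, 2]})
print(response["body"])
```

The caller never sees a server. It sends data, gets a result, and pays only for the time the function actually ran.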

Navigating today’s IT landscape is certainly not for the faint of heart. Our clients rely on us to understand how to best utilize cloud computing resources based on their business requirements.
