Hello! My name is Chris Allen and I’m going to use a tiny Rust app to demonstrate deploying Rust with Docker and Kubernetes. Rust and Haskell apps are deployed in similar ways. Much of this is because the compilers for both languages generate native binary executables.

Here are the technologies we’ll be using and why:

First, here’s our Rust application:

    extern crate futures;
    extern crate telegram_bot;
    extern crate tokio_core;

    use std::env;

    use futures::Stream;
    use tokio_core::reactor::Core;
    use telegram_bot::*;

    fn main() {
        let mut core = Core::new().unwrap();

        let token = env::var("TELEGRAM_BOT_TOKEN").unwrap();
        let api = Api::configure(token).build(core.handle()).unwrap();

        // Fetch new updates via long poll method
        let future = api.stream().for_each(|update| {
            // If the received update contains a new message...
            if let UpdateKind::Message(message) = update.kind {
                if let MessageKind::Text {ref data, ..} = message.kind {
                    // Print received text message to stdout.
                    println!("<{}>: {}", &message.from.first_name, data);

                    // Answer message with "Hi".
                    api.spawn(message.text_reply(
                        format!("Hi, {}! You just wrote '{}'", &message.from.first_name, data)
                    ));
                }
            }

            Ok(())
        });

        core.run(future).unwrap();
    }

This is identical to the lovely example provided by the telegram-bot library. All it does is run a Telegram bot which repeats back what you said.

To build this locally for development purposes, you would run:

    cargo build

in your terminal. You’ll also need the Cargo.toml file, which specifies the project dependencies. The source code above (the main module) needs to be placed in a file located at src/main.rs. You can see how these are set up at:

https://gitlab.com/bitemyapp/totto/

To run this application and see if it works locally, you will first need a Telegram account. Once you’re on Telegram, you’ll want to talk to the BotFather to get an API token for your instance of Totto. From there, you could run the application on macOS or Linux by doing the following:

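    export TELEGRAM_BOT_TOKEN=my_token_I_got_from_botfather
    cargo run --bin totto

The name after --bin should match the name specified in the Cargo.toml (https://gitlab.com/bitemyapp/totto/blob/master/Cargo.toml#L2), because Cargo names the binary after the package by default. Since src/main.rs is the only binary here, a plain cargo run works too. Bare words after cargo run, as in cargo run totto, are passed to the program as arguments rather than used to select a binary.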

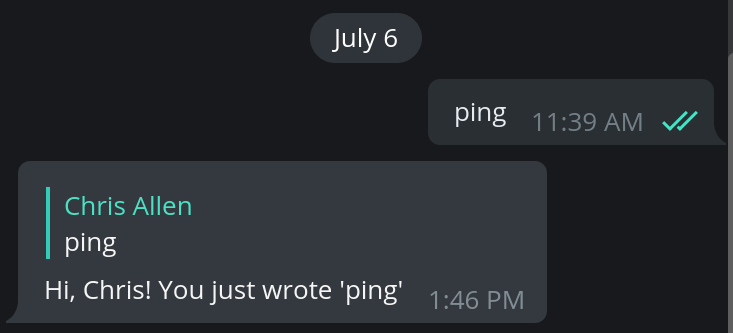

If you direct message the bot the word “ping”, it’ll reply with:

    Hi, your_name! You just wrote 'ping'

as a Telegram reply to your message, where your_name is your first name on Telegram.
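If you want to check the reply text without a Telegram account, one option is to move the format! call into a plain function and unit-test it. This is a hypothetical refactor, not part of the telegram-bot example, and the helper name reply_text is my own. Inside the for_each closure you would then call message.text_reply(reply_text(&message.from.first_name, data)).

```rust
/// Hypothetical helper: builds the same reply text as the bot's `format!` call.
fn reply_text(first_name: &str, data: &str) -> String {
    format!("Hi, {}! You just wrote '{}'", first_name, data)
}

fn main() {
    // Prints: Hi, Chris! You just wrote 'ping'
    println!("{}", reply_text("Chris", "ping"));
}
```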

I wanted to demonstrate two runtime container environments for a Rust application: a conventional one, and a minimal one that is as small as possible.

For a more conventional Docker environment, you could look at this Dockerfile:

    FROM rust@sha256:1cdce1c7208150f065dac04b580ab8363a03cff7ddb745ddc2659d58dbc12ea8 as build
    COPY ./ ./
    RUN cargo build --release
    RUN mkdir -p /build-out
    RUN cp target/release/totto /build-out/

    # Ubuntu 18.04
    FROM ubuntu@sha256:5f4bdc3467537cbbe563e80db2c3ec95d548a9145d64453b06939c4592d67b6d
    ENV DEBIAN_FRONTEND=noninteractive
    RUN apt-get update && apt-get -y install ca-certificates libssl-dev && rm -rf /var/lib/apt/lists/*
    COPY --from=build /build-out/totto /
    CMD /totto

We’re pinning the rust and ubuntu images by their SHA256 digests to improve the reproducibility of the Docker build. The rust image is the official Rust image on Docker Hub. We’re also using a multi-stage build to separate the needs of the build environment from those of the runtime environment. We don’t want to carry the compiler and the build artifacts around in our deployment image! To learn more about this approach, please see Deni Bertovic’s post on building Haskell apps with Docker.

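There are two main things our Rust application needs in the runtime environment in order to function. The first is trusted CA certificates, so the HTTP client can verify the Telegram API’s TLS certificate. The second is the OpenSSL shared libraries that our binary is dynamically linked against.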

To furnish these requirements, we have:

    RUN apt-get update && apt-get -y install ca-certificates libssl-dev && rm -rf /var/lib/apt/lists/*

We install libssl-dev because the default Rust build for this application dynamically links OpenSSL, which the HTTP client needs for TLS support. Because this dependency is linked dynamically, libssl-dev has to be installed in the runtime environment. Many developers avoid a Docker image like ubuntu:18.04 in favor of alpine or scratch. I recommend starting with ubuntu unless you have a demonstrable need for leaner Docker images. Ubuntu’s Docker images provide a fairly conventional Linux environment, and it can be much quicker to get that environment configured correctly for production use.

However, since I know people will want the lean version, I also built a statically linked version that runs on a minimal image.

For the fun of it, we’ll use scratch instead of Alpine, even though Alpine is more common for minimal images.

Alpine is a very minimal Linux distribution designed for maximally small Docker images. If you want to see what’s included in Alpine, take a look at the Dockerfile for its Docker image. If you’re curious what comes with the image, you can decompress its rootfs.tar.xz on your computer.

scratch is a baseline Docker image that contains nothing. It’s the starting point for minimal distribution images like Alpine. If you’re willing to sort out all of your dependencies yourself, you can use scratch for your deployments.

Here’s our Dockerfile for the static binary:

    FROM yasuyuky/rust-ssl-static@sha256:3df2c8949e910452ee09a5bcb121fada9790251f4208c6fd97bb09d20542f188 as build
    COPY ./ ./
    ENV DEBIAN_FRONTEND=noninteractive
    RUN apt-get update && apt-get -y install ca-certificates libssl-dev && rm -rf /var/lib/apt/lists/*
    ENV PKG_CONFIG_ALLOW_CROSS=1
    RUN cargo build --target x86_64-unknown-linux-musl --release
    RUN mkdir -p /build-out
    RUN cp target/x86_64-unknown-linux-musl/release/totto /build-out/
    RUN ls /build-out/

    FROM scratch
    COPY --from=build /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/ca-certificates.crt
    COPY --from=build /build-out/totto /
    ENV SSL_CERT_FILE=/etc/ssl/certs/ca-certificates.crt
    ENV SSL_CERT_DIR=/etc/ssl/certs
    CMD ["/totto"]

This is structurally similar to our dynamically linked Docker build and runtime environment. The main differences are that we’re using yasuyuky’s rust-ssl-static image as the build image and scratch for the most minimal possible runtime environment. The TLS/SSL support gets linked into the binary at compile time, so we no longer need it to exist as a separate library in the runtime environment.

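ENV PKG_CONFIG_ALLOW_CROSS=1 sets the environment variable for the cargo build command that follows. The x86_64-unknown-linux-musl target differs from the build machine’s host target, so Cargo treats this build as a cross-compile. By default, the pkg-config crate refuses to run when cross-compiling unless this variable is set. Build scripts such as openssl-sys’s use that crate to locate native libraries.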

If you get an error like this:

    $ docker run -e TELEGRAM_BOT_TOKEN registry.gitlab.com/bitemyapp/totto:latest
    docker: Error response from daemon: OCI runtime create failed: container_linux.go:348: starting container process caused "exec: "/bin/sh": stat /bin/sh: no such file or directory": unknown.
    ERRO[0000] error waiting for container: context canceled

You might be invoking the command or entrypoint incorrectly.

scratch, unlike alpine, doesn’t have anything inside of it, not even /bin/sh! Accordingly, your command must use the exec form:

    CMD ["/totto"]

and not the shell form:

    CMD /totto

The shell form runs the command through /bin/sh -c, and there is no shell in scratch unless you copy one into the environment. To set environment variables, you can use the Dockerfile ENV instruction as we did above.

For this section, I’ll assume you’re using kubectl and that you’ve already pointed your KUBECONFIG environment variable at a kubeconfig file for a cluster you have access to. Our Kubernetes deployment specification for this app looks like this:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: totto
    spec:
      replicas: 1
      minReadySeconds: 5
      # apps/v1 requires a selector that matches the pod template's labels
      selector:
        matchLabels:
          app: totto
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: totto
        spec:
          containers:
          - name: totto
            image: registry.gitlab.com/bitemyapp/totto:latest
            imagePullPolicy: Always
            env:
            - name: TELEGRAM_BOT_TOKEN
              valueFrom:
                secretKeyRef:
                  name: totto-telegram-token
                  key: totto-token
            resources:
              requests:
                cpu: 10m
                memory: 10M
              limits:
                cpu: 20m
                memory: 20M

Make sure image: points to a registry accessible to your Kubernetes cluster. I used GitLab’s registry for my public repository because it requires no authentication. If you’d like to set up pulling images from a private registry, please see the Kubernetes documentation on this:

https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

Always set resources: for applications deployed on Kubernetes! Kubernetes can’t do a good job of managing resource allocations without it. Nodes often get over-subscribed when applications are deployed without resource requests and limits.

The resource limits are set fairly low because our Rust application won’t need much. This isn’t too unusual, since many odds-and-ends task worker apps in a production environment have similar resource utilization. Much of the operational cost savings from Kubernetes come from being able to thin-slice and right-size the resource allocation for your applications. This holds even if you’re already operating in a cloud environment like AWS. AWS doesn’t offer EC2 instances with 20 thousandths of a CPU and 20 megabytes of RAM!
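To make the units concrete: in Kubernetes, the m suffix on a CPU quantity means thousandths of a core, M on a memory quantity means 10^6 bytes, and Mi means 2^20 bytes. The sketch below is a deliberately simplified, hypothetical parser that handles only those few suffixes, unlike the real Kubernetes quantity parser. It also does some back-of-the-envelope arithmetic for an assumed node with 2 cores and 4Gi of RAM.

```rust
/// Simplified CPU quantity parser (a sketch, not the Kubernetes implementation):
/// "20m" is 20 millicores and a bare integer such as "2" is whole cores.
/// Returns millicores.
fn cpu_millicores(q: &str) -> Option<u64> {
    match q.strip_suffix('m') {
        Some(milli) => milli.parse().ok(),
        None => q.parse::<u64>().ok().map(|cores| cores * 1000),
    }
}

/// Simplified memory quantity parser: only the decimal suffixes M and G, the
/// binary suffixes Mi and Gi, and plain byte counts. Returns bytes.
fn memory_bytes(q: &str) -> Option<u64> {
    let suffixes: [(&str, u64); 4] = [
        ("Mi", 1 << 20),
        ("Gi", 1 << 30),
        ("M", 1_000_000),
        ("G", 1_000_000_000),
    ];
    for &(suffix, factor) in suffixes.iter() {
        if let Some(n) = q.strip_suffix(suffix) {
            return n.parse::<u64>().ok().map(|v| v * factor);
        }
    }
    q.parse().ok()
}

fn main() {
    let cpu_limit = cpu_millicores("20m").unwrap();
    let mem_limit = memory_bytes("20M").unwrap();
    // Prints: limit: 0.020 cores, 20000000 bytes
    println!("limit: {:.3} cores, {} bytes", cpu_limit as f64 / 1000.0, mem_limit);

    // Assumed node with 2 cores and 4Gi of RAM, ignoring system reservations:
    // how many pods with this app's requests (10m CPU, 10M memory) fit?
    let by_cpu = cpu_millicores("2").unwrap() / cpu_millicores("10m").unwrap();
    let by_mem = memory_bytes("4Gi").unwrap() / memory_bytes("10M").unwrap();
    // Prints: pods that fit: 200
    println!("pods that fit: {}", by_cpu.min(by_mem));
}
```

The scheduler places pods according to their requests, so by CPU alone about 200 copies of this app would fit on that node. In practice, the kubelet’s default limit of 110 pods per node, plus whatever the system reserves, would cap the count first.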

Applying the deployment spec to the Kubernetes cluster and triggering deployment of the image:

    $ kubectl apply -f etc/kubernetes/totto.yaml
    deployment "totto" configured
    $ kubectl rollout status -f etc/kubernetes/totto.yaml
    Waiting for rollout to finish: 0 of 1 updated replicas are available...
    deployment "totto" successfully rolled out

If you see this, the application is probably deployed and running. Next, I’ll talk about configuration and some errors I ran into.

Fortunately, configuring this Telegram bot application is pretty simple! Our application relies on being able to get the Telegram bot API token from the environment variables in this line of code:

    let token = env::var("TELEGRAM_BOT_TOKEN").unwrap();

Accordingly, we’ll need to ensure the TELEGRAM_BOT_TOKEN environment variable is set for our Docker containers in production. You may recall this section from the pod spec:

    env:
    - name: TELEGRAM_BOT_TOKEN
      valueFrom:
        secretKeyRef:
          name: totto-telegram-token
          key: totto-token

To make this work, I used the following kubectl command to create the secret:

    kubectl create secret generic totto-telegram-token --from-file=/home/callen/Secrets/totto-token

Here, name: is the name of the secret, and key: is the file name passed to --from-file= (kubectl uses the file’s base name as the key). The contents of totto-token were simply the Telegram API token and nothing extra. With this in place, the environment should be set correctly for the Docker container when it runs in the Kubernetes cluster.
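kubectl create secret generic --from-file stores the file’s bytes exactly. If the file ends with a newline (echo and many editors add one), that newline also ends up in TELEGRAM_BOT_TOKEN. The following sketch is a defensive variation on the env::var(...).unwrap() line. It uses a hypothetical parse_token helper that trims surrounding whitespace and reports a readable error instead of panicking. Totto itself doesn’t do this.

```rust
use std::env;

/// Hypothetical helper (not part of Totto): turns the raw result of
/// `env::var` into a usable token, trimming surrounding whitespace such as a
/// trailing newline copied from the secret file.
fn parse_token(raw: Result<String, env::VarError>) -> Result<String, String> {
    let token = raw.map_err(|e| format!("TELEGRAM_BOT_TOKEN is not usable: {}", e))?;
    let trimmed = token.trim();
    if trimmed.is_empty() {
        return Err("TELEGRAM_BOT_TOKEN is set but empty".to_string());
    }
    Ok(trimmed.to_string())
}

fn main() {
    match parse_token(env::var("TELEGRAM_BOT_TOKEN")) {
        // Report only the length; the token itself is a secret.
        Ok(token) => println!("token loaded ({} characters)", token.len()),
        // A real service would stop here, e.g. with std::process::exit(1).
        Err(msg) => eprintln!("{}", msg),
    }
}
```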

The Kubernetes developers do not currently support automatically redeploying when the image behind an unchanged name and tag is updated, and they are unlikely to add such support in the future.

For a real project, you’ll want a more dynamic image tagging setup. Kubernetes won’t assume that an image identified by a particular name and tag has “mutated” since it last pulled it, so re-applying an unchanged pod spec doesn’t trigger a new rollout. One way to solve this problem is to have your Makefile append a build identifier to the image tag:

    export CI_REGISTRY_IMAGE ?= registry.gitlab.fpcomplete.com/chrisallen/totto
    export CI_PIPELINE_ID ?= $(shell date +"%Y-%m-%d-%s")
    export DOCKER_IMAGE_CURRENT ?= ${CI_REGISTRY_IMAGE}:${CI_BUILD_LIB_TYPE}_${CI_BUILD_REF_SLUG}_${CI_PIPELINE_ID}
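The same per-build naming scheme can live in any build tooling. As a sketch, here it is in Rust, with a hypothetical image_ref helper that produces the DOCKER_IMAGE_CURRENT shape. The values "static" and "master" are placeholders for CI_BUILD_LIB_TYPE and CI_BUILD_REF_SLUG, whose real values depend on your pipeline. The fallback uses plain Unix seconds rather than the Makefile’s date format. What matters is that each build yields a distinct tag. Putting that tag in image: changes the pod template, which gives Kubernetes a new rollout to perform.

```rust
use std::env;
use std::time::{SystemTime, UNIX_EPOCH};

/// Hypothetical helper: assembles a per-build image reference shaped like the
/// Makefile's DOCKER_IMAGE_CURRENT, i.e. REGISTRY_IMAGE:LIBTYPE_REFSLUG_PIPELINEID.
fn image_ref(registry_image: &str, lib_type: &str, ref_slug: &str, pipeline_id: &str) -> String {
    format!("{}:{}_{}_{}", registry_image, lib_type, ref_slug, pipeline_id)
}

/// Uses CI_PIPELINE_ID when GitLab CI provides it; otherwise falls back to the
/// current Unix time in seconds so local builds still get a fresh tag.
fn pipeline_id() -> String {
    env::var("CI_PIPELINE_ID").unwrap_or_else(|_| {
        SystemTime::now()
            .duration_since(UNIX_EPOCH)
            .expect("system clock is set before 1970")
            .as_secs()
            .to_string()
    })
}

fn main() {
    // "static" and "master" are placeholders for CI_BUILD_LIB_TYPE and
    // CI_BUILD_REF_SLUG; substitute whatever your pipeline defines.
    let image = image_ref("registry.gitlab.com/bitemyapp/totto", "static", "master", &pipeline_id());
    println!("{}", image);
}
```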

For convenience and to keep this demonstration simple, I used a single image tag:

    export FPCO_CI_REGISTRY_IMAGE ?= registry.gitlab.fpcomplete.com/chrisallen/totto
    export CI_REGISTRY_IMAGE ?= registry.gitlab.com/bitemyapp/totto
    export FPCO_DOCKER_IMAGE ?= ${CI_REGISTRY_IMAGE}:latest
    export DOCKER_IMAGE ?= ${CI_REGISTRY_IMAGE}:latest

These image names are stable and do not change from build to build. To make deployment rollbacks possible, you really want varying image names or tags so that you can bump :latest back to the last known-good deployment. You’re missing much of the benefit of Docker if you don’t hold onto the images you’ve deployed.

To work around this for the very simple, temporary app I was trying to deploy, I would fiddle with a variable in my pod spec before each kubectl apply. One option for dealing with this is to use helm to template your pod specs. Another hacky solution, for when you’re just testing a deployment, is to kubectl delete your deployment and then re-apply it.

I ran into some problems while I was figuring out how to deploy this Telegram bot as a static binary under the Docker scratch environment. To interrogate the pod after I applied the pod spec, I used kubectl rollout status -f etc/kubernetes/totto.yaml to monitor the deployment in one terminal. When I noticed it hadn’t wrapped up after about 15 seconds, I did the following in another terminal:

    $ kubectl get pods
    [... listing of the pods and their status, I noticed there was a crash loop for Totto ...]
    $ kubectl describe pod totto-2950502675-3x2nk
    [... some more detailed information ...]
    $ kubectl logs totto-2950502675-3x2nk
    thread 'main' panicked at 'called `Result::unwrap()` on an `Err` value: Error(Hyper(Io(Custom { kind: Other, error: Ssl(ErrorStack([Error { code: 336134278, library: "SSL routines", function: "ssl3_get_server_certificate", reason: "certificate verify failed", file: "s3_clnt.c", line: 1264 }])) })), State { next_error: None, backtrace: None })', libcore/result.rs:945:5
    note: Run with `RUST_BACKTRACE=1` for a backtrace.

The problem was that the scratch image really is empty out of the box, so it doesn’t have any trusted CA certificates pre-installed.

Cf. https://github.com/japaric/cross/issues/119

For our bot to work, the HTTP client underlying the Rust telegram-bot library needs to be able to trust https://api.telegram.org. To solve this, I took advantage of the larger intermediate image based on Ubuntu Xenial and copied its CA certificates into our scratch-based runtime image:

    COPY --from=build /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/ca-certificates.crt
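Without that bundle, the bot still starts. It only fails when it makes its first HTTPS request, with the certificate verify failed panic shown above. If you’d rather fail fast with a clearer message, a startup check along these lines could help. It’s a hypothetical addition: check_ca_bundle is not part of Totto. It also only confirms that the file exists and is non-empty; it doesn’t validate the certificates inside.

```rust
use std::env;
use std::fs;
use std::path::Path;

/// Hypothetical startup check (not part of Totto): confirms that the CA bundle
/// exists and is a non-empty regular file. It does not parse or validate the
/// certificates themselves.
fn check_ca_bundle(path: &Path) -> Result<(), String> {
    match fs::metadata(path) {
        Ok(meta) if meta.is_file() && meta.len() > 0 => Ok(()),
        Ok(_) => Err(format!("{} is not a non-empty file", path.display())),
        Err(e) => Err(format!("cannot read CA bundle {}: {}", path.display(), e)),
    }
}

fn main() {
    // Default to the same path the Dockerfile copies the bundle to.
    let cert_file = env::var("SSL_CERT_FILE")
        .unwrap_or_else(|_| "/etc/ssl/certs/ca-certificates.crt".to_string());
    match check_ca_bundle(Path::new(&cert_file)) {
        Ok(()) => println!("CA bundle found at {}", cert_file),
        // A real service would stop here, e.g. with std::process::exit(1).
        Err(msg) => eprintln!("{}", msg),
    }
}
```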

Note that I used ENV instructions and the exec form of CMD:

    ENV SSL_CERT_FILE=/etc/ssl/certs/ca-certificates.crt
    ENV SSL_CERT_DIR=/etc/ssl/certs
    CMD ["/totto"]

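The exec form is a JSON array. The first element is the path to the executable, and any remaining elements are passed to it as arguments. Nothing interprets shell syntax such as VAR=value prefixes, so environment variables have to be set with ENV instead:

    # Ok
    CMD ["/totto"]

    # Not ok: the whole string is treated as the name of the executable
    CMD ["SSL_CERT_FILE=/etc/ssl/certs/ca-certificates.crt SSL_CERT_DIR=/etc/ssl/certs /totto"]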
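Here is a rough analogy in Rust. The exec form hands the container runtime a program and its arguments directly, much like spawning a process with std::process::Command, where no shell ever interprets VAR=value prefixes. In the sketch below, run_exec_form is a hypothetical helper, and the second call assumes a Unix-like host with /bin/sh. The first call fails because the whole string is treated as the program’s path. The second passes the variable separately, which is the role ENV plays for the container.

```rust
use std::io;
use std::process::{Command, ExitStatus};

/// Hypothetical helper: runs argv[0] with the remaining elements as arguments
/// and the given extra environment variables, without going through a shell.
fn run_exec_form(argv: &[&str], envs: &[(&str, &str)]) -> io::Result<ExitStatus> {
    let (program, args) = argv
        .split_first()
        .ok_or_else(|| io::Error::new(io::ErrorKind::InvalidInput, "empty argv"))?;
    Command::new(program)
        .args(args)
        .envs(envs.iter().copied())
        .status()
}

fn main() {
    // Like the "Not ok" CMD: the entire string is taken as the program path,
    // so spawning fails.
    let broken = run_exec_form(
        &["SSL_CERT_FILE=/etc/ssl/certs/ca-certificates.crt SSL_CERT_DIR=/etc/ssl/certs /totto"],
        &[],
    );
    println!("broken: {:?}", broken.map_err(|e| e.kind()));

    // Passing the variable separately works. /bin/sh is used here only to
    // check the variable's value; a scratch image would not contain it.
    let ok = run_exec_form(
        &["/bin/sh", "-c", "test \"$SSL_CERT_FILE\" = /etc/ssl/certs/ca-certificates.crt"],
        &[("SSL_CERT_FILE", "/etc/ssl/certs/ca-certificates.crt")],
    );
    println!("working: {:?}", ok.map(|status| status.success()));
}
```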

The final image for the statically linked binary with the CA certificates was 10.3 MB according to docker images on my Linux desktop. That isn’t much larger than the binary itself. If you were feeling cheeky, you could run strip on the binary before deploying it to make it even smaller, but that could stymie debugging later.

The final source can be found at: https://gitlab.com/bitemyapp/totto

    $ kubectl get pods | grep totto
    totto-2950502675-zsw3n 1/1 Running 0 42m

Please reach out if you have any questions about Rust, Docker, or Kubernetes.
